Our goal

To increase and open up new vectors of progress in the field of computing by bringing the cost of AI hardware down by more than 1000x.

Our current mechanisms for training AI systems utilise a narrow set of algorithms and hardware building blocks, which require significant capital to develop and manufacture. The combination of this significance and scarcity has far-reaching economic, geopolitical, and societal implications.

We see an opportunity to draw inspiration from natural processing systems, which innately process complex information more efficiently (by several orders of magnitude) than today's largest AI systems.

This would unlock a new technological lever for next-generation AI hardware, alleviate dependence on leading-edge chip manufacturing, and open up new avenues to scale AI hardware, an industry worth trillions of pounds.

Pillars

This programme is split into three pillars, each with its own distinct objectives.

Charting the Course

Developing software simulators to help the research community map the expected performance/power/cost for any future combination of algorithm, hardware, componentry, and system scale.

Advanced Networking and Interconnect

We know the movement of data has become as critical as raw computational power, so we’re funding two projects to interrogate system-level and advanced network design opportunities.

New Computational Primitives

Developing new technologies with the potential to open up new vectors of progress for the field of computing, with a targeted relevance for modern AI algorithms.

Explore the funded projects

We're funding 13 teams bringing expertise across three critical technology domains and a strong institutional mix, to pull novel ideas to prototypes and into real-world applications.

Meet the programme team

Our Programme Directors are supported by a core team that provides a blend of operational coordination and highly specialised technical expertise.

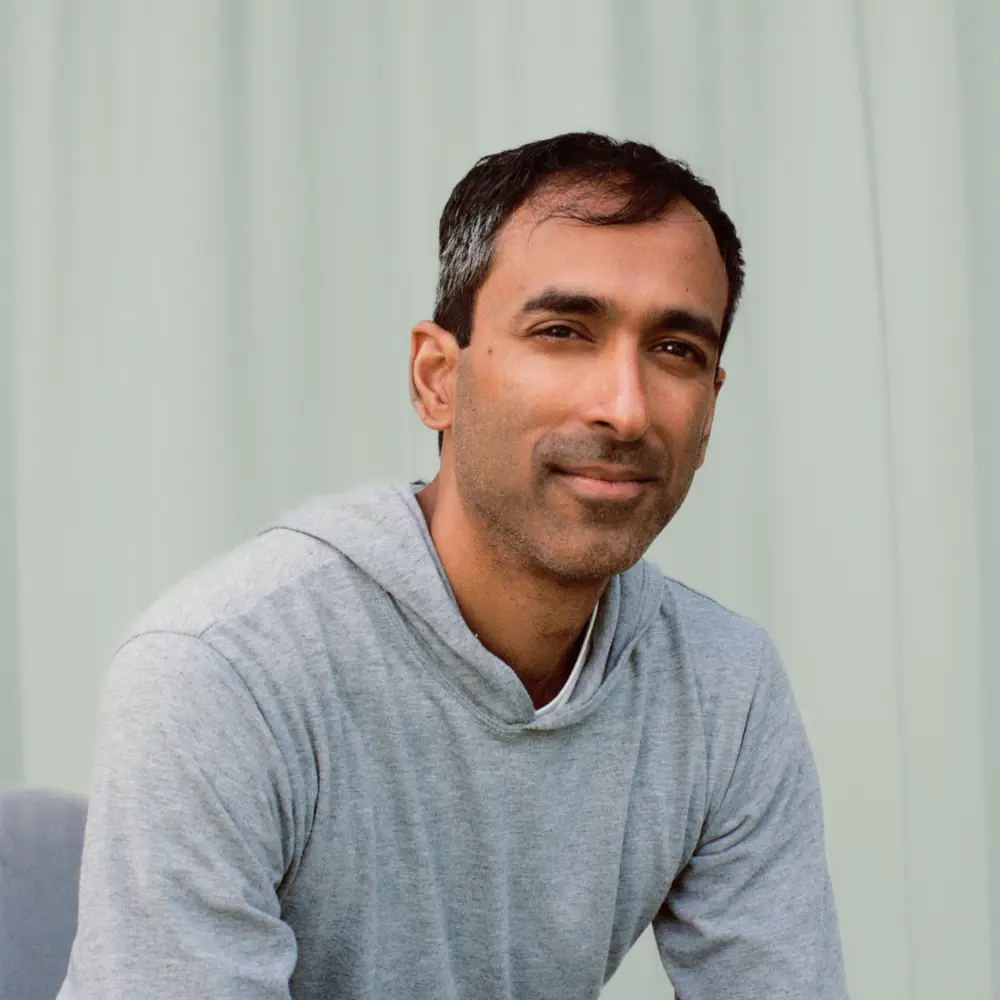

Suraj Bramhavar

Programme Director

Suraj is an electrical engineer. His work focuses on how we can redefine the way computers process information to build dramatically more efficient computers. Suraj joined ARIA from Sync Computing, a company that optimises the use of modern cloud computing resources, where he was co-founder and CTO.

David Stringer

Programme Specialist

David trained as a chemist at UCL before working in materials science at Imperial College London, developing nanocarbon devices for sensing, photovoltaics, and energy storage. Prior to ARIA, he built sales and operations functions at early-stage startups focusing on physics-based software for the automotive and consumer electronics industries. David supports ARIA as an Operating Partner from Pace.

Paolo Toccaceli

Technical Specialist

Paolo is an electronic engineer by training, and has spent the majority of his professional career in technical R&D roles for large high-tech companies, such as HP and Alcatel-Lucent. He returned to academia to earn a PhD in Machine Learning, then joined Graphcore, a startup that develops innovative AI hardware.

Featured insights

Natural computation in single-celled microorganisms

ARIA Substack

As a Nature Computes Better opportunity seed Creator, Kirsty and her team are working to better understand single-celled microorganisms. We caught up with Kirsty to learn more about how her research is developing.
