Nature Computes Better

Opportunity seeds support ambitious research aligned to our opportunity spaces. We’re looking to challenge assumptions, open up new research paths, and provide steps towards new capabilities.

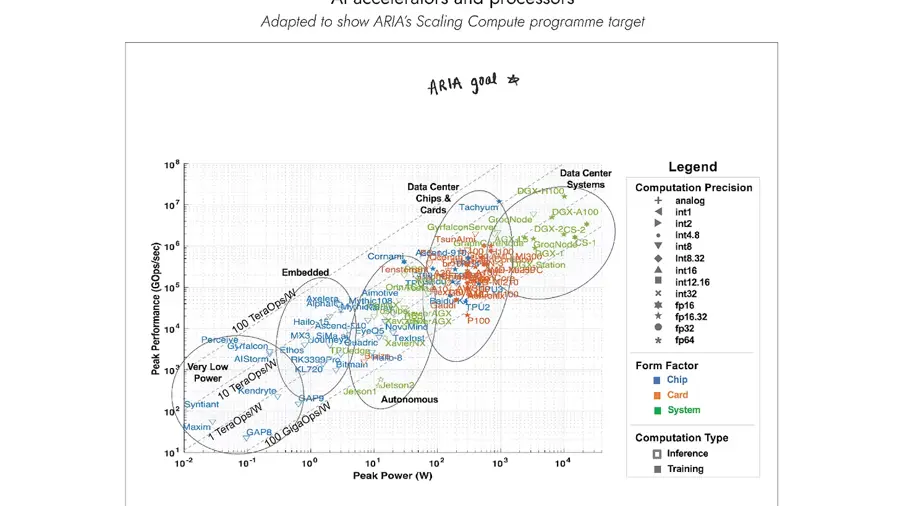

Can we redefine the way computers process information by exploiting principles found ubiquitously in nature?

From unravelling the basis of natural computation in single-celled organisms to demonstrating a commercially viable probabilistic processor, we're funding an array of projects, with up to £500k each, across individual research teams, universities and startups to maximise the chance of breakthroughs.

Meet the Creators

Two-Point Neurons-Inspired Economic and Ethical Neuromorphic Co-Design

Ahsan Adeel, University of Stirling

Relating thought to pyramidal two-point cells, Adeel is aiming to develop a new form of AI chip that is economical and, when guided by its owners’ needs and values, will empower individuals to make more informed judgements.

“There is convergent validity among recent cellular neurobiological discoveries, high-resolution modelling, and biologically plausible simulations that pyramidal two-point cells operate in different modes. These modes include slow-wave sleep, the typical wakeful state (common sense), and imaginative thought. This suggests that cellular mechanisms could be embodied in machines to enable cognitive capabilities that are effective and economical.”

Cell Learning for Natural Computing

David Jordan, Independent researcher

By combining theory, experiments, and engineering – as well as shifting our thinking from a genome-centric to a metabolism-centric point of view – David seeks to understand how cells use networks of macromolecules to learn, adapt, and improvise.

“Looking to biology for inspiration, to physics for rigour, and to technology for applications has proved to be a very fruitful path to progress. One of the main goals of my research is to understand how biological systems manage to organise themselves such that the natural unfolding of their dynamics, as dictated by the laws of physics, provides computations useful for prediction, adaptation, and control.”

Physically-Reconfigurable Computing: Learning How To Learn

Neil Gershenfeld, Massachusetts Institute of Technology

This work aims to revisit the foundations of computing, by enabling software to change the construction of its hardware (and vice versa). The project will target machine learning systems that can grow and evolve their own architecture based on workloads, much like biological systems.

“This project on learning how to learn with physically-reconfigurable computing systems picks up where John von Neumann left off with self-reproducing automata, and Alan Turing left off with morphogenesis, both at the end of their careers thinking about the physical form of computation. I'm grateful to ARIA for the chance to tackle an ambitious goal we think we can achieve, but aren't certain in advance exactly how to do it, something that's increasingly hard to do with incremental research funding.”

(Bio)active Matter Based Computation

Juliane Simmchen + Kimia Witte, University of Strathclyde

Juliane and Kimia are aiming to engineer miniature vehicles that respond to external stimuli, each exhibiting specific behaviours that correspond to symbolic representations.

“Beyond simply drawing inspiration from nature to engineer novel technologies, we need to take a step further and begin co-engineering with it.”

Probabilistic Computing with Magnetic Tunnel Junctions

Shannon Egan, Brock Doiron + Ashraf Lotfi, Deep Science Ventures

Investigating the controllability of stochastic switching in magnetic tunnel junctions, the team are developing protocols to drive these memory devices as ultra-high-speed, high-quality hardware-based true random number generators.

“Modelling probabilistic processes on computers designed for deterministic calculations is highly inefficient, yet the behaviour of the underlying devices is rich with randomness — all we need to do is harness it. Our team is motivated to build a high performance, practical product for true random number generation at-large-scale, simply by getting creative with how we drive known devices and commercial hardware. Coupled with DSV’s established venture building approach, the Nature Computes Better philosophy fits this ambition perfectly.”

Embodied Cognition in Single Celled Organisms

Kirsty Wan, University of Exeter

Kirsty is aiming to unravel the basis of natural computation in single-celled organisms through an interdisciplinary approach combining bioimaging, live-cell experiments, and modelling, to study how single cells compute using physical attributes of their bodies and the environment.

“We can learn so much from how living systems compute at all scales, and there are distinct challenges of ‘being alive’ that make these systems so much more robust and flexible than non-living architectures.”

Analog and Digital Representation of Distributions of AI Computations

Phillip Stanley-Marbell, Signaloid

As large language model workloads scale, their compute requirements are outstripping the capabilities of the substrates on which they run. Phillip asks if architectures capable of natively representing probability distributions could serve as radically more efficient computing substrates for AI workloads.

“Computation plays an important role in all aspects of society. There is currently a missed opportunity to use an understanding of the physical world to design efficient computing systems that interact with nature.”

Creating Scalable Manufacturing for Optical Computing

Martin Booth, University of Oxford

Glass is a promising substrate for next-generation optical computing paradigms – Martin is aiming to address a critical aspect of technology deployment, which is the ability to manufacture at scale.

“There is an urgent need for technology advancement in this area and there are many impressive ideas about how optical methods can be used to improve future computing technology.”

Brain-inspired polychromic spatially embedded neuromorphic networks with unprecedented memory

Danyal Akarca, Imperial College London

Danyal is combining biologically-inspired neural networks with neuromorphic hardware to test the idea that delays between spatially embedded neurons, rather than slowing down computation, endow networks with significantly elevated computational capacities at relatively low cost.

“Computation in the brain is much more complex than just tuning synaptic weights. I think we can learn a huge amount from where (in space) and when (through time) computation happens in the brain, and translate these learned principles to new efficient artificial intelligence architectures.”

Lossy Computational Models

Viv Kendon + Susan Stepney, Universities of Strathclyde and York

Viv and Susan will develop natural computational models taking into account what we can build in the lab, and the natural properties of photons, including that they aren’t conserved (massless bosons). Such a model will include photon loss – and gain – as features, not bugs.

“Instead of trying to force photons into computational models designed for matter (fermions), building models based on their intrinsic properties should give us the optimal ways to use photonics for computation, because we won’t be wasting resources to make the system do things that are hard/expensive.”

Meet the programme team

Suraj Bramhavar is an electrical engineer. His work focuses on how we can redefine the way computers process information to build dramatically more efficient computers. Suraj joined ARIA from Sync Computing, a company that optimises the use of modern cloud computing resources, where he was co-founder and CTO. The company was spun out from his research at MIT Lincoln Laboratory. Suraj previously worked at Intel Corp, helping transition silicon photonics technology from an R&D effort into a business now worth over $1BN.

"Increasingly, the way we process information in the digital world is colliding with the way our natural world functions. This interdisciplinary space provides a unique opportunity to both uncover insights for improving the performance of modern computers, and also to provide an entirely new lens by which to understand/engineer nature."

Paolo is an electronic engineer by training and has spent the majority of his professional career in technical R&D roles at large high-tech companies such as HP and Alcatel-Lucent. He returned to academia to earn a PhD in Machine Learning, then joined Graphcore, a startup that develops innovative AI hardware.

David trained as a chemist at University College London before transitioning to work in materials science at Imperial College London, where he developed nanocarbon devices for sensing, photovoltaics, and energy storage. Prior to joining ARIA, David built sales and operations functions at early-stage startups, focusing on physics-based software for the automotive and consumer electronics industries. David supports ARIA as an Operating Partner from Pace.

Find out more

The UK's ARIA is searching for better AI tech, ft Suraj Bramhavar

IEEE Spectrum — Fixing the Future podcast