Safeguarded AI

Backed by £59m, this programme aims to develop the safety standards we need for transformational AI.

Our goal

Our goal is to usher in a new era for AI safety, allowing us to unlock the full economic and social benefits of advanced AI systems while minimising risks.

Why this programme

As AI becomes more capable, it has the potential to power scientific breakthroughs, enhance global prosperity, and safeguard us from disasters, but only if it’s deployed wisely. Current techniques for mitigating the risks of advanced AI systems have serious limitations and can’t be relied upon empirically to ensure safety. To date, very little R&D effort has gone into approaches that provide quantitative safety guarantees for AI systems, because such approaches are considered impossible or impractical.

What we’re shooting for

By combining scientific world models and mathematical proofs, we aim to construct a ‘gatekeeper’: an AI system tasked with understanding and reducing the risks of other AI agents. In doing so, we’ll develop quantitative safety guarantees for AI of the kind we have come to expect for nuclear power and passenger aviation.

Technical areas

This programme is split into three technical areas (TAs), each with its own distinct objectives.

Scaffolding

We can build an extendable, interoperable language and platform to maintain formal world models and specifications, and check proof certificates.

Machine Learning

We can use frontier AI to help domain experts build best-in-class mathematical models of complex real-world dynamics, and to train verifiable autonomous systems.

Real-World Applications

A safeguarded autonomous AI system with quantitative safety guarantees can unlock significant economic value when deployed in a critical cyber-physical operating context.

Funding call: Technical Areas 1.2 and 1.3

For TA 1.2, we are looking for Creators to develop the computational implementation of the theoretical frameworks being developed as part of TA 1.1 (the 'Theory'). This implementation will involve version control, type checking, proof checking, security by design, and flexible paradigms for interaction between humans and AI assistants, among other things.

For TA 1.3, Creators will work on the 'Human-Computer Interfaces' that facilitate interaction between diverse human users and the systems being built in TA 1.2 and TA 2 (‘Machine Learning’). Examples of HCI use cases include AI assistants helping to author and review world models and safety specifications, or helping to review guarantees and sample trajectories for spot/sense-checking or more comprehensive red-teaming.

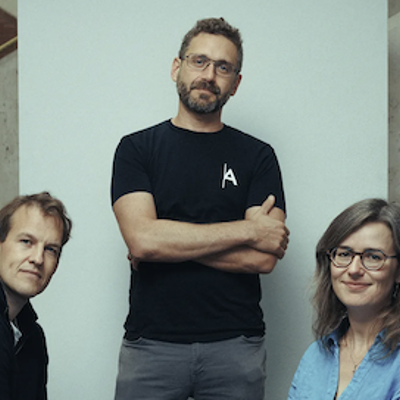

Featured insights

Yoshua Bengio joins Safeguarded AI as Scientific Director

We're excited to welcome Professor Yoshua Bengio as Scientific Director for Safeguarded AI, supporting the work led by Programme Director ‘davidad’ Dalrymple.

As Scientific Director, Yoshua will work with the Safeguarded AI team and our R&D Creators, providing scientific and strategic advice across the full programme, with a particular focus on TA 3 and TA 2.